The recent DNS challenges for a large organization that impacted their worldwide customers bring to mind a project we completed this year, a global password reset redundancy solution.

We worked with a client who desired to manage unplanned WAN outages to their five (5) data centers for three (3) independent MS Active Directory Domains with integration to various on-prem applications/ endpoints. The business requirement was for self-service password sync, where the users’ password change process is initialed/managed by the two (2) different MS Active Directory Password Policies.

Without the WAN outage requirement, any IAM/IAG solution may manage this request within a single data center. A reverse password sync agent process is enabled on all writable MS Active Directory domain controllers (DC). All the world-wide MS ADS domain controllers would communicate to the single data center to validate and resend this password change to all of the users’ managed endpoint/application accounts, e.g. SAP, Mainframe (ACF2/RACF/TSS), AS/400, Unix, SaaS, Database, LDAP, Certs, etc.

With the WAN outage requirement, however, a queue or components must be deployed/enabled at each global data center, so that password changes are allowed to sync locally to avoid work-stoppage and async-queued to avoid out-of-sync password to the other endpoint/applications that may be in other data centers.

We were able to work with the client to determine that their current IAM/IAG solution would have the means to meet this requirement, but we wished to confirm no issues with WAN latency and the async process. The WAN latency was measured at less than 300 msec between remote data centers that were opposite globally. The WAN latency measured is the global distance and any intermediate devices that the network traffic may pass through.

To review the solution’s ability to meet the latency issues, we introduced a test environment to emulate the global latency for deployment use-cases, change password use-cases, and standard CrUD use-cases. There is a feature within VMWare Workstation, that allows emulation of degraded network traffic. This process was a very useful planning/validation tool to lower rollback risk during production deployment.

The solution used for the Global Password Rest solution was Symantec Identity Suite Virtual Appliance r14.3cp2. This solution has many tiers, where select components may be globally deployed and others may not.

We avoided any changes to the J2EE tier (Wildfly) or Database for our architecture as these components are not supported for WAN latency by the Vendor. Note: We have worked with other clients that have deployment at two (2) remote data centers within 1000 km, that have reported minimal challenges for these tiers.

We focused our efforts on the Provisioning Tier and Connector Tier. The Provisioning Tier consists of the Provisioning Server and Provisioning Directory.

The Provisioning Server has no shared knowledge with other Provisioning Servers. The Provisioning Directory (Symantec Directory) is where the provisioning data may be set up in a multi-write peer model. Symantec Directory is a proper X.500 directory with high redundancy and is designed to manage WAN latency between remote data centers and recovery after an outage. See example provided below.

The Connector Tier consists of the Java Connector Server and C++ Connector Server, which may be deployed on MS Windows as an independent component. There is no shared knowledge between Connector Servers, which works in our favor.

Requirement:

Three (3) independent MS Active Directory domain in five (5) remote data centers need to allow self-service password change & allow local password sync during a WAN outage. Passwords changes are driven by MS ADS Password Policies (every N days). The IME Password Policy for IAG/IAM solution is not enabled, IME authentication is redirected to an ADS domain, and the IMPS IM Callback Feature is disabled.

Below is an image that outlines the topology for five (5) global data centers in AMER, EMEA, and APAC.

The flow diagram below captures the password change use-case (self-service or delegated), the expected data flow to the user’s managed endpoints/applications, and the eventual peer sync of the MS Active Directory domain local to the user.

Observation(s):

The standalone solution of Symantec IAG/IAM has no expected challenges with configurations, but the Virtual Appliance offers pre-canned configurations that may impact a WAN deployment.

During this project, we identified three (3) challenges using the virtual appliance.

Two (2) items needed the assistance of the Broadcom Support and Engineering teams. They were able to work with us to address deployment configuration challenges with the “check_cluster_clock_sync -v ” process that incorrectly increments time delays between servers instead of resetting a value of zero between testing between servers.

Why this is important? The “check_cluster_clock_sync” alias is used during auto-deployment of vApp nodes. If the time reported between servers is > 15 seconds then replication may fail. This time check issue was addressed with a hotfix. After the hot-fix was deployed, all clock differences were resolved.

The second challenge was a deployment challenge of the IMPS component for its embedded “registry files/folders”. The prior embedded copy process was observed to be using standard “scp”. With a WAN latency, the scp copy operation may take more than 30 seconds. Our testing with the Virtual Appliance showed that a simple copy would take over two (2) minutes for multiple small files. After reviewing with CA support/engineering, they provided an updated copy process using “rsync” that speeds up copy performance by >100x. Before this update, the impact was provisioning tier deployment would fail and partial rollback would occur.

The last challenge we identified was using the Symantec Directory’s embedded features to manage WAN latency via multi-write HUB groups. The Virtual Appliance cannot automatically manage this feature when enabled in the knowledge files of the provisioning data DSAs. Symantec Directory will fail to start after auto-deployment.

Fortunately, on the Virtual appliance, we have full access to the ‘dsa’ service ID and can modify these knowledge files before/after deployment. Suppose we wish to roll back or add a new Provisioning Server Virtual Appliance. In that case, we must disable the multi-write HUB group configuration temporarily, e.g. comment out the configuration parameter and re-init the DATA DSAs.

Six (6) Steps for Global Password Reset Solution Deployment

We were able to refine our list of steps for deployment using pre-built knowledge files and deployment of the vApp nodes in blank slates with the base components of Provisioning Server (PS) and Provisioning Directory) with a remote MS Windows server for the Connector Server (JCS/CCS).

Step 1: Update Symantec Directory DATA DSA’s knowledge configuration files to use the multiple group HUB model. Note that multi-write group configuration is enabled within the DATA DSA’s *.dxc files. One Directory servers in each data center will be defined as a “HUB”.

To assist this configuration effort, we leveraged a serials of bash shell scripts that could be pasted into multiple putty/ssh sessions on each vApp to replace the “HUB” string with a “sed” command.

After the HUB model is enabled (stop/start the DATA DSAs), confirm that delayed WAN latency has no challenge with Symantec Directory sync processes. By monitoring the Symantec Directory logs during replication, we can see that sync operation with the WAN latency is captured with the delay > 1 msecs between data centers AMER1 and APAC1.

Step 2: Update IMPS configurations to avoid delays with Global Password Reset solution.

Note for this architecture, we do not use external IME Password Policies. We ensure that each AD endpoint has the checkbox enabled for “Password synchronization agent is installed” & each Global User (GU) has “Enable Password Synchronization Agent” checkbox enabled to prevent data looping. To ensure this GU attribute is always enabled, we updated an attribute under “Create Users Default Attributes”.

Step 3a: Update the Connector Tier (CCS Component)

Ensure that the MS Windows Environmental variables for the CCS connector are defined for Failover (ADS_FAILOVER) and Retry (ADS_RETRY).

Step 3b: Update the CCS DNS knowledge file of ADS DCs hostnames.

Important Note: Avoid using the refresh feature “Refresh DC List” within the IMPS GUI for the ADS Endpoint. If this feature is used, then a “merge” will be processed from the local CCS DNS file contents and what is defined within the IMPS GUI refresh process. If we wish to manage the redirection to local MS ADS Domain Controllers, we need to control this behavior. If this step is done, we can clean out the Symantec Directory of extra entries. The only negative aspect is the local password change may attempt to communicate to one of the remote MS ADS Domain Controllers that are not within the local data center. During a WAN outage, a user would notice a delay during the password change event while the CCS connector timed out the connection until it connected to the local MS ADS DC.

Step 3c: CCS ADS Failover

If using SSL over TCP 636 confirm the ADS Domain Root Certificate is deployed to the MS Windows Server where the CCS service is deployed. If using SASL over TCP 389 (if available), then no additional effort is required.

If using SSL over TCP 636, use the MS tool certlm.msc to export the public root CA Certificate for this ADS Domain. Export to base64 format for import to the MS Windows host (if not already part of the ADS Domain) with the same MS tool certlm.msc.

Step 4a: Update the Connector Tier for the JCS component.

Add the stabilization parameter “maxWait” to the JCS/CCS configuration file. Recommend 10-30 seconds.

Step 4b: Update JCS registration to the IMPS Tier

You may use the Virtual Appliance Console, but this has a delay when pulling the list of any JCS connector that may be down at this time of the check/submission. If we use the Connector Xpress UI, we can accomplish the same process much faster with additional flexibility for routing rules to the exact MS ADS Endpoints in the local data center.

Step 4c: Observe the IMPS routing to JCS via etatrans log during any transaction.

If any JCS service is unavailable (TCP 20411), then the routing rules process will report a value of 999.00, instead of a low value of 0.00-1.00.

Step 5: Update the Remote Password Change Agent (DLL) on MS ADS Domain Controllers (writable)

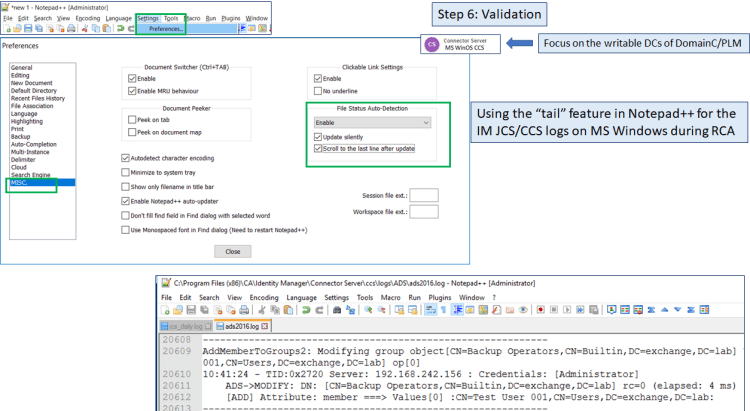

Step 6a: Validation of Self-Service Password Change to selected MS ADS Domain Controller.

Using various MS Active Directory processes, we can emulate a delegated or self-service password change early during the configuration cycle, to confirm deployment is correct. The below example uses MS Powershell to select a writable MS ADS Domain Controller to update a user’s password. We can then monitor the logs at all tiers for completion of this password change event.

A view of the password change event from the Reverse Password Sync Agent log file on the exact MS Domain Controller.

Step 6b: Validation of password change event via CCS ADS Log.

Step 6c: Validation of password change event via IMPS etatrans log

Note: Below screenshot showcases alias/function to assist with monitoring the etatrans logs on the Virtual Appliance.

Below screen shot showcases using ldapsearch to check timestamps for before/after of password change event within MS Active Directory Domain.

We hope these notes are of some value to your business and projects.

Appendix

Using the MS Windows Server for CCS Server Get current status of AD account on select DC server before Password Change: PowerShell Example: get-aduser -Server dc2012.exchange2020.lab "idmpwtest" -properties passwordlastset, passwordneverexpires | ft name, passwordlastset LdapSearch Example: (using ldapsearch.exe from CCS bin folder - as the user with current password.) C:\> & "C:\Program Files (x86)\CA\Identity Manager\Connector Server\ccs\bin\ldapsearch.exe" -LLL -h dc2012.exchange2012.lab -p 389 -D "cn=idmpwtest,cn=Users,DC=exchange2012,DC=lab" -w "Password05" -b "CN=idmpwtest,CN=Users,DC=exchange2012,DC=lab" -s base pwdLastSet Change AD account's password via Powershell: PowerShell Example: Set-ADAccountPassword -Identity "idmpwtest" -Reset -NewPassword (ConvertTo-SecureString -AsPlainText "Password06" -Force) -Server dc2016.exchange.lab Get current status of AD account on select DC server after Password Change: PowerShell Example: get-aduser -Server dc2012.exchange2020.lab "idmpwtest" -properties passwordlastset, passwordneverexpires | ft name, passwordlastset LdapSearch Example: (using ldapsearch.exe from CCS bin folder - as the user with NEW password) C:\> & "C:\Program Files (x86)\CA\Identity Manager\Connector Server\ccs\bin\ldapsearch.exe" -LLL -h dc2012.exchange2012.lab -p 389 -D "cn=idmpwtest,cn=Users,DC=exchange2012,DC=lab" -w "Password06" -b "CN=idmpwtest,CN=Users,DC=exchange2012,DC=lab" -s base pwdLastSet

Using the Provisioning Server for password change event

Get current status of AD account on select DC server before Password Change:

LDAPSearch Example: (From IMPS server - as user with current password)

LDAPTLS_REQCERT=never ldapsearch -LLL -H ldaps://192.168.242.154:636 -D 'CN=idmpwtest,OU=People,dc=exchange2012,dc=lab' -w Password05 -b "CN=idmpwtest,OU=People,dc=exchange2012,dc=lab" -s sub dn pwdLastSet whenChanged

Change AD account's password via ldapmodify & base64 conversion process:

LDAPModify Example:

BASE64PWD=`echo -n '"Password06"' | iconv -f utf8 -t utf16le | base64 -w 0`

ADSHOST='192.168.242.154'

ADSUSERDN='CN=Administrator,CN=Users,DC=exchange2012,DC=lab'

ADSPWD='Password01!’

ldapmodify -v -a -H ldaps://$ADSHOST:636 -D "$ADSUSERDN" -w "$ADSPWD" << EOF

dn: CN=idmpwtest,OU=People,dc=exchange2012,dc=lab

changetype: modify

replace: unicodePwd

unicodePwd::$BASE64PWD

EOF

Get current status of AD account on select DC server after Password Change:

LDAPSearch Example: (From IMPS server - with user's account and new password)

LDAPTLS_REQCERT=never ldapsearch -LLL -H ldaps://192.168.242.154:636 -D 'CN=idmpwtest,OU=People,dc=exchange2012,dc=lab' -w Password06 -b "CN=idmpwtest,OU=People,dc=exchange2012,dc=lab" -s sub dn pwdLastSet whenChanged