You’re probably familiar with those emails that claim to be from your bank, CPA, or services like Microsoft and Google. They use deceptive tactics, such as subtly altered URLs and stolen branding, to trick you into entering your credentials on fraudulent sites. If you’re tired or distracted, it’s easy to fall for these schemes, which can put you at serious risk.

The great advantage, the “beauty,” of passkeys is their ability to render these phishing attempts ineffective. By design, a passkey only works on the website it was created for, so a phishing link sent by email or text cannot achieve its goal, and your credentials stay secure.

Why does this work?

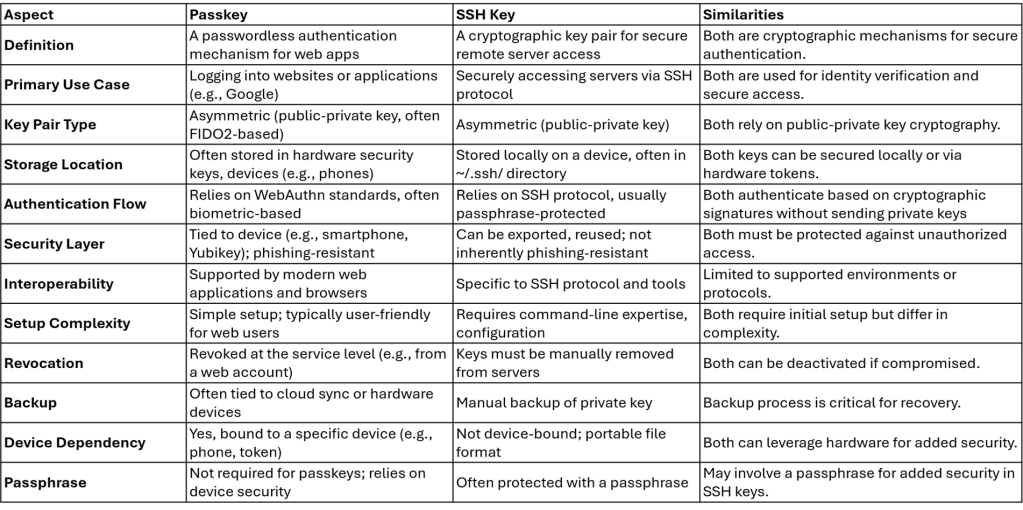

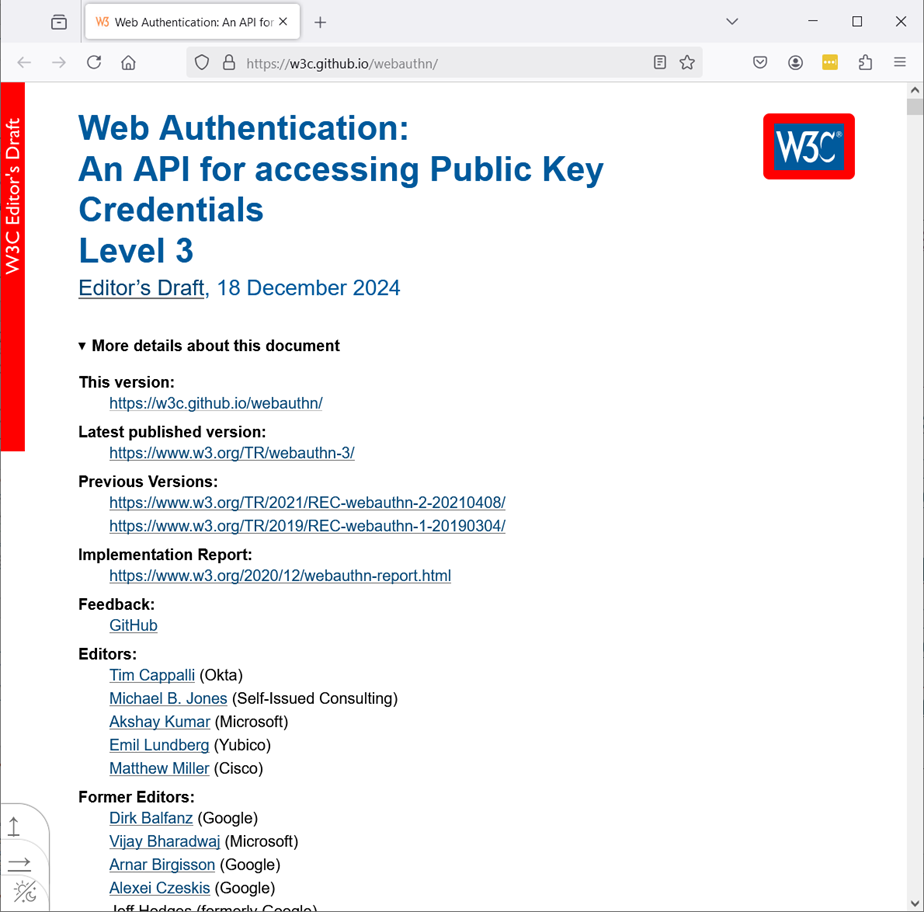

The “magic” behind this innovation lies in the collaborative effort of the industry at large, spearheaded by the FIDO Alliance, to establish a unified standard (FIDO2, which includes the W3C WebAuthn browser API). This standard uses public/private key cryptography in a user-friendly way, making it easier for individuals to secure their remote accounts.

This new standard is already supported by browsers on laptops and mobile devices. However, because it’s still relatively new, adoption among banks and financial institutions has been limited; for instance, only one of my financial institutions currently supports it. Most organizations still rely on two-factor authentication, combining a password with either an SMS code or an authentication app. That said, major global companies like Google and Microsoft are leading the charge in rolling out passkeys, and it’s likely only a matter of time before broader adoption follows.

How does this work?

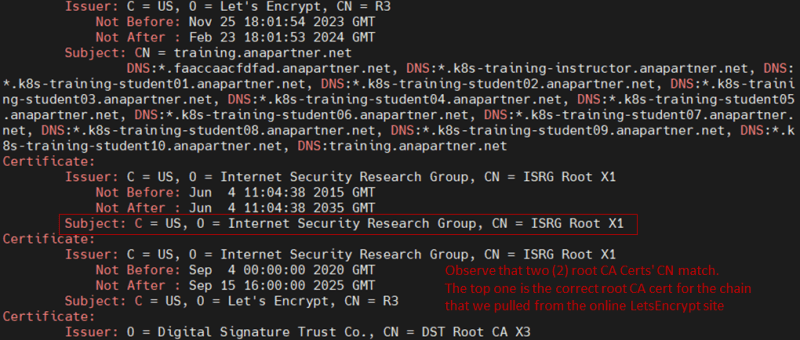

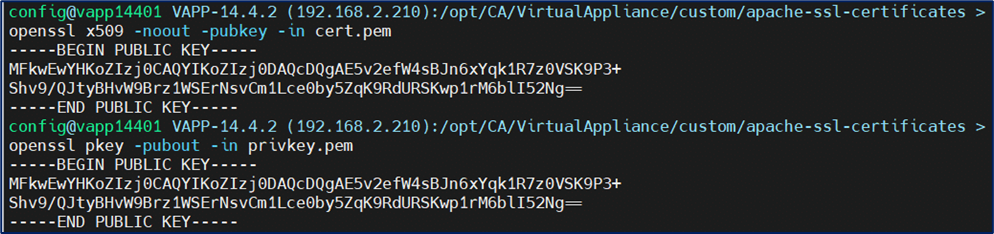

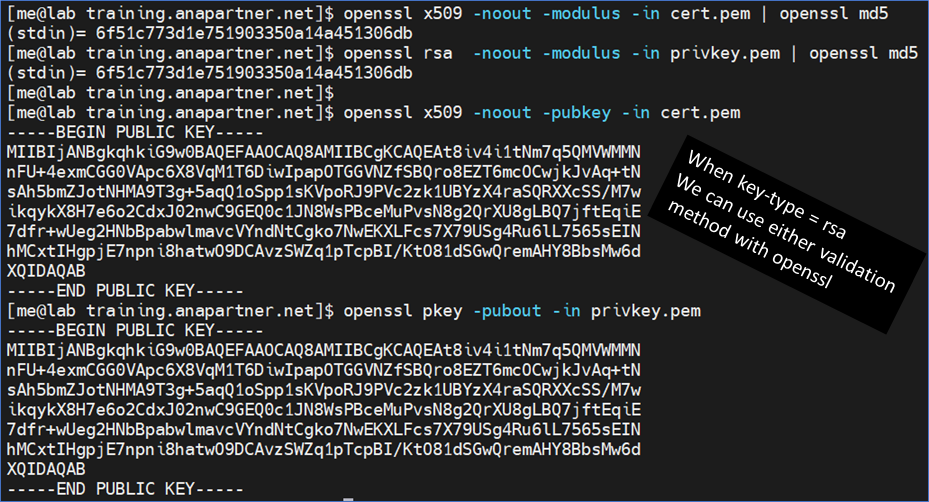

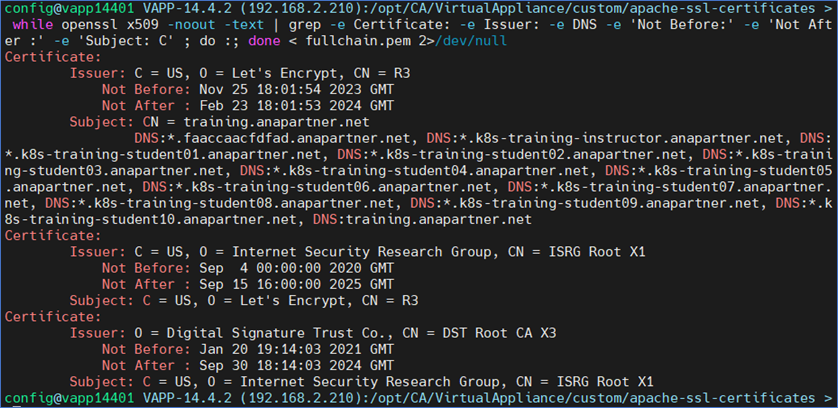

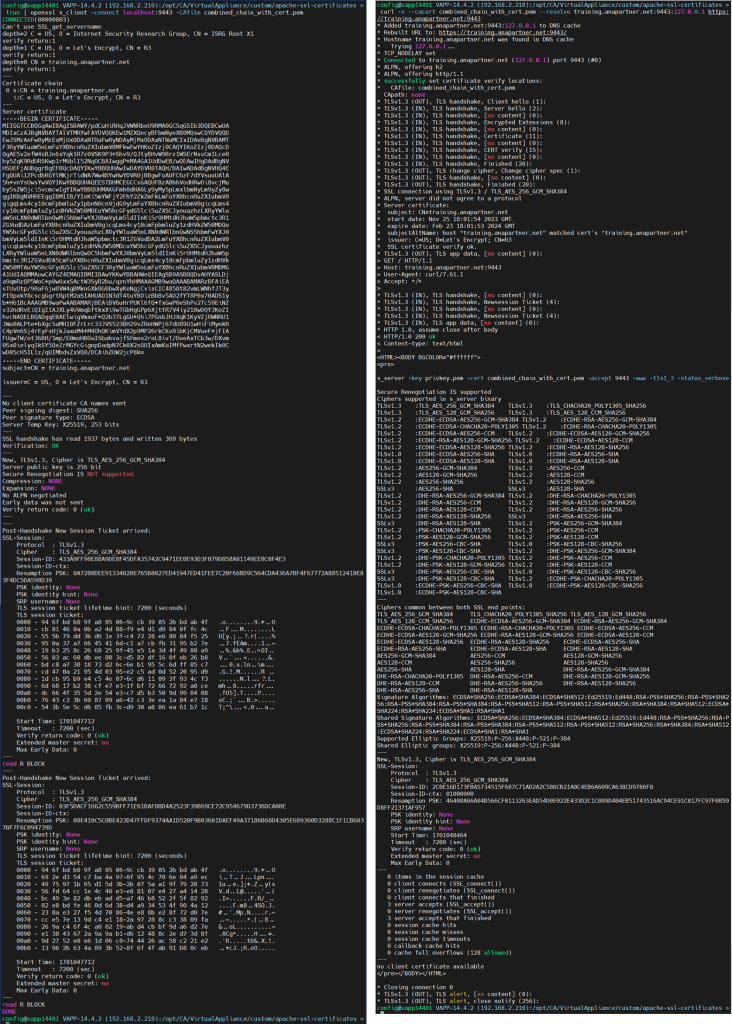

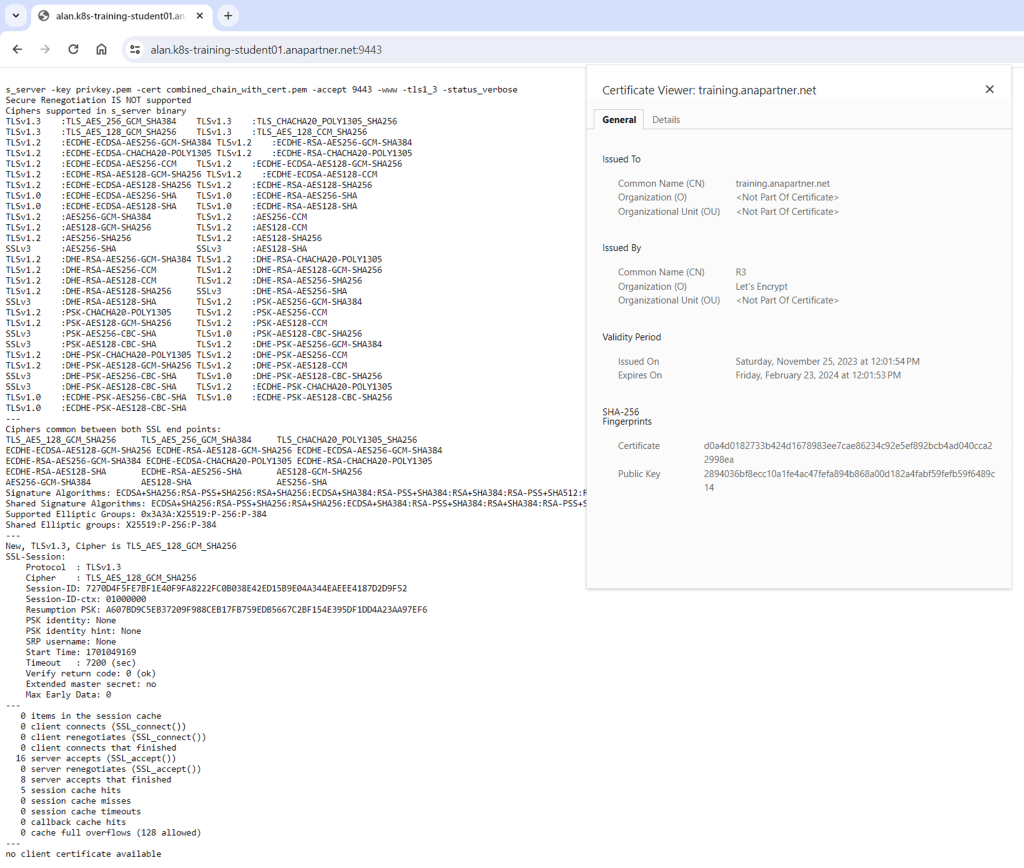

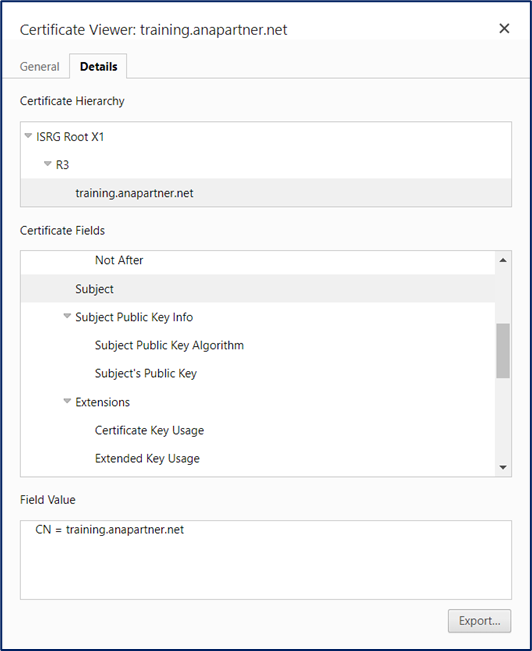

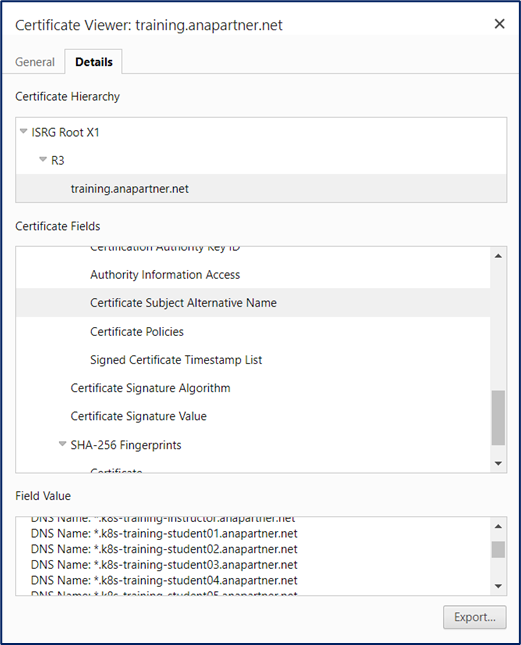

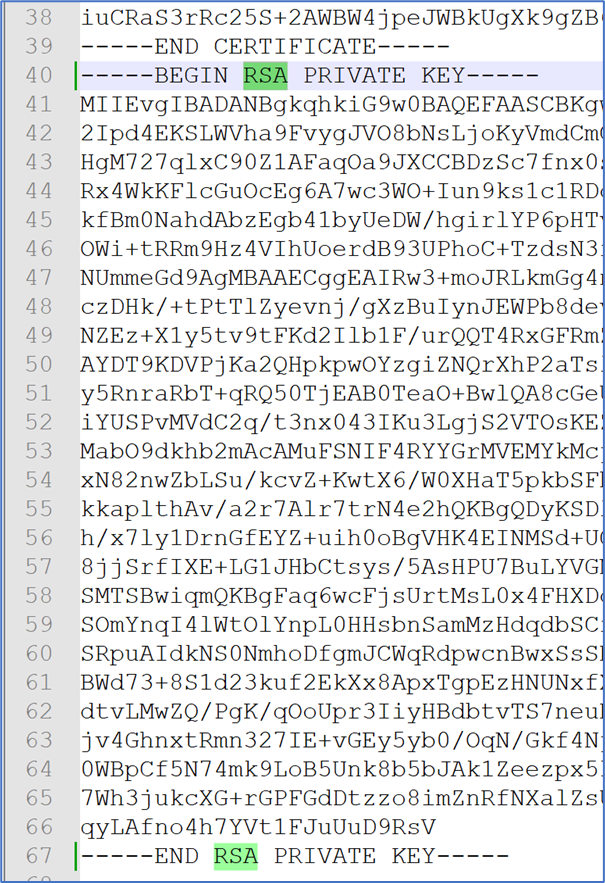

Background: Public/private key cryptography (asymmetric cryptography) matured significantly, especially between 1990 and 2010, to enable secure online activities such as shopping and banking. Here’s how it works: a shopping or banking website presents a certificate that contains its public key (visible to anyone) and is signed by a trusted certificate authority. When you connect, your browser checks that certificate against the name in the address bar, and the site proves during connection setup (the TLS handshake) that it holds the matching private key, which never leaves the site’s servers. If both checks pass, the connection is encrypted, the lock icon appears, and you can proceed with your transactions.
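To see what the lock actually checks, here is a minimal sketch using Python’s standard `ssl` module (an illustration of the idea, not how any particular browser is built). Its default client context verifies the certificate chain against trusted certificate authorities and checks that the certificate matches the host name you asked for. `make_client_context` and `fetch_peer_certificate` are hypothetical helper names.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # Loads the system's trusted CA certificates and turns on both
    # certificate-chain verification and host-name checking.
    return ssl.create_default_context()


def fetch_peer_certificate(host: str, port: int = 443) -> dict:
    """Open a TLS connection to `host` and return the certificate it presented.

    The handshake raises ssl.SSLCertVerificationError if the certificate is not
    signed by a trusted CA or does not match `host`, roughly the situations in
    which a browser would refuse to show the lock.
    """
    context = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        # server_hostname is both the SNI value and the name the certificate must match.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.getpeercert()
```

For example, `fetch_peer_certificate("www.google.com")["notAfter"]` returns the certificate’s expiry date (network access required). Note the limit this exposes: a look-alike domain with its own valid certificate passes exactly the same checks for its own name.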

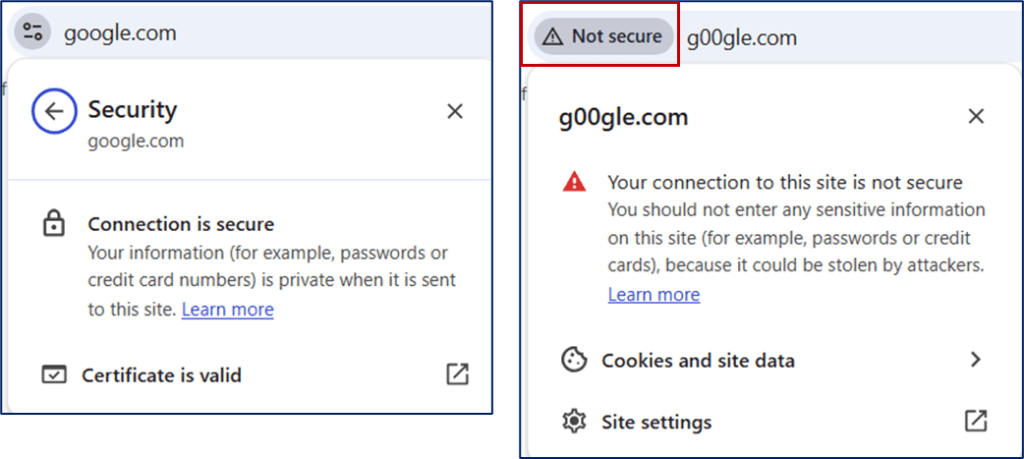

New Phishing Risks: Cyber-criminals (aka “bad-guys”) have become adept at creating convincing fake websites with their own public/private keys, paired with stolen images and content to appear legitimate. For years, we’ve been trained to trust the lock symbol in the URL bar as an indicator of a secure connection. However, the lock only proves that the connection is encrypted to whatever domain is in the address bar, not that it is the site you meant to visit. For instance, a malicious site could replace the letter “o” with a zero in “google.com” and still show a valid lock. These tactics highlight the need to go beyond basic visual indicators and “raise the bar” on security to keep our online activities safe.
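Passkeys close that gap because each one is bound to the domain (the WebAuthn “relying party ID”) it was registered for, and the browser, not the web page, knows which domain is really asking. Below is a simplified sketch of that lookup, assuming a plain suffix rule; real browsers also reject public suffixes such as “com”, and `Passkey`, `rp_id_allowed`, and `passkeys_offered` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Passkey:
    rp_id: str          # domain ("relying party ID") the passkey was registered for
    user_name: str
    credential_id: str  # hypothetical identifier, for illustration only


def rp_id_allowed(rp_id: str, page_host: str) -> bool:
    """Simplified rule: the RP ID must be the page's host or a parent domain of it."""
    return page_host == rp_id or page_host.endswith("." + rp_id)


def passkeys_offered(stored: list[Passkey], page_host: str) -> list[Passkey]:
    """Passkeys the browser would offer on the host currently in the address bar."""
    return [pk for pk in stored if rp_id_allowed(pk.rp_id, page_host)]


my_keys = [Passkey("google.com", "alice", "cred-1")]
print(len(passkeys_offered(my_keys, "accounts.google.com")))  # 1
print(passkeys_offered(my_keys, "g00gle.com"))                # []
```

The look-alike domain simply has no passkey to offer, no matter how convincing the page looks.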

Raise the Bar: With passkeys, the private key stays securely on your device (e.g., phone, workstation, physical security key, or tablet), while only the matching public key is stored on the site where you registered. To sign in, the site sends a fresh random challenge, your device signs it with the private key, and the site verifies the signature with the stored public key. Because the private key never leaves your device, and each signature is tied to one challenge and to the site’s real domain, a fake site has nothing useful to intercept. This significantly raises the security “bar” against malicious remote access attempts.
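The challenge/response exchange above can be sketched in a few lines of Python. This is a toy under loudly stated assumptions: the key pair is textbook RSA with tiny, unpadded parameters (real passkeys commonly use ECDSA P-256), the signed data is simplified (real WebAuthn signs authenticator data plus a hash of the browser’s client data), and every function name is hypothetical. What it does show is the structure: only the device can sign, the site keeps just the public key, and the browser writes the real origin into what gets signed.

```python
from __future__ import annotations

import hashlib
import json
import secrets

# TOY key pair: textbook RSA over two Mersenne primes (2**61 - 1 and 2**89 - 1).
# Far too small and unpadded to be secure; it only stands in for the ECDSA keys
# real passkeys use, so the sketch needs no third-party packages.
_P, _Q = 2**61 - 1, 2**89 - 1
PUBLIC_KEY = (_P * _Q, 65537)                       # (n, e): given to the website
_PRIVATE_D = pow(65537, -1, (_P - 1) * (_Q - 1))    # d: never leaves the device


def _digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def device_sign(data: bytes) -> int:
    """Runs on the phone or security key, the only place the private exponent exists."""
    n, _ = PUBLIC_KEY
    return pow(_digest(data, n), _PRIVATE_D, n)


def browser_client_data(challenge: str, origin: str) -> bytes:
    """The browser (not the web page) records which origin is really asking."""
    return json.dumps({"type": "webauthn.get", "challenge": challenge, "origin": origin}).encode()


def site_accepts(public_key: tuple[int, int], expected_origin: str, challenge: str,
                 client_data: bytes, signature: int) -> bool:
    """Runs on the real website, which stored only the public key at registration."""
    fields = json.loads(client_data)
    if fields["challenge"] != challenge or fields["origin"] != expected_origin:
        return False  # stale challenge (replay) or signed for a different site
    n, e = public_key
    return pow(signature, e, n) == _digest(client_data, n)


challenge = secrets.token_urlsafe(16)  # fresh random value for every sign-in
good = browser_client_data(challenge, "https://bank.example")
print(site_accepts(PUBLIC_KEY, "https://bank.example", challenge, good, device_sign(good)))  # True

# Even if a look-alike site relayed the real challenge, the browser records its own origin.
relayed = browser_client_data(challenge, "https://bank-examp1e.com")
print(site_accepts(PUBLIC_KEY, "https://bank.example", challenge, relayed, device_sign(relayed)))  # False
```

In practice the look-alike site would not even get that far, since your authenticator holds no passkey for its domain; the origin check is a second, independent line of defense.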

That said, passkeys are still early in their global adoption, and the rollout is ongoing. Challenges remain, such as backing up passkeys and replicating them across devices, but these should not deter you from using this technology to enhance your personal security.

Register a ‘passkey’ with your mobile phone.

Why? We want the bigger screen of a laptop/workstation (private or public) together with the security and mobility of a ‘passkey’ that lives on our phone.

If you are accessing a website from your phone, you will have no issue registering the passkey, because all the components on your phone already trust one another, e.g. the hardware chip that protects the passkey (private key), the middleware (Samsung Pass, Google Password Manager, or Apple iCloud Keychain), and your mobile browser.

The current challenge is using your mobile phone with your private laptop/workstation. This area seems to be glossed over in current online documentation, which assumes every user has already linked their mobile phone with their private workstation. Unfortunately, this is a “gotcha” when using ‘passkeys’.

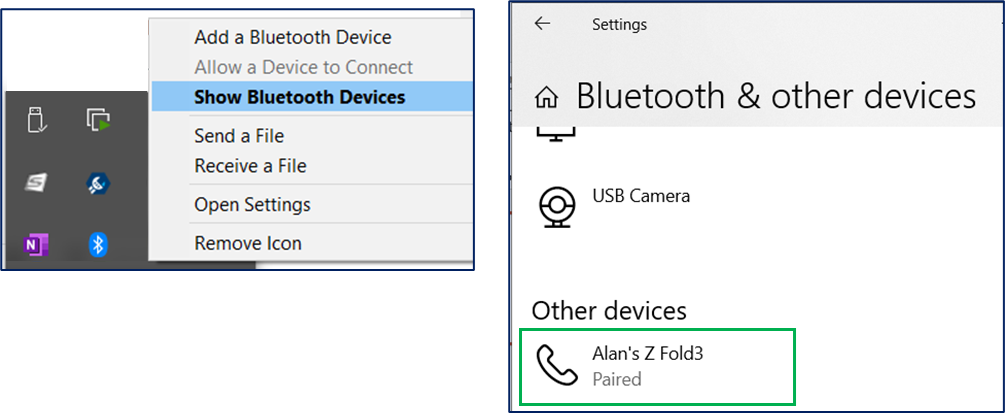

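Per the standard (the FIDO “hybrid” transport behind the QR-code flow), Bluetooth is how your laptop/workstation (public or private) confirms that your mobile phone is physically nearby; the encrypted exchange itself is then relayed over the internet. Bluetooth therefore has to be working on both devices.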

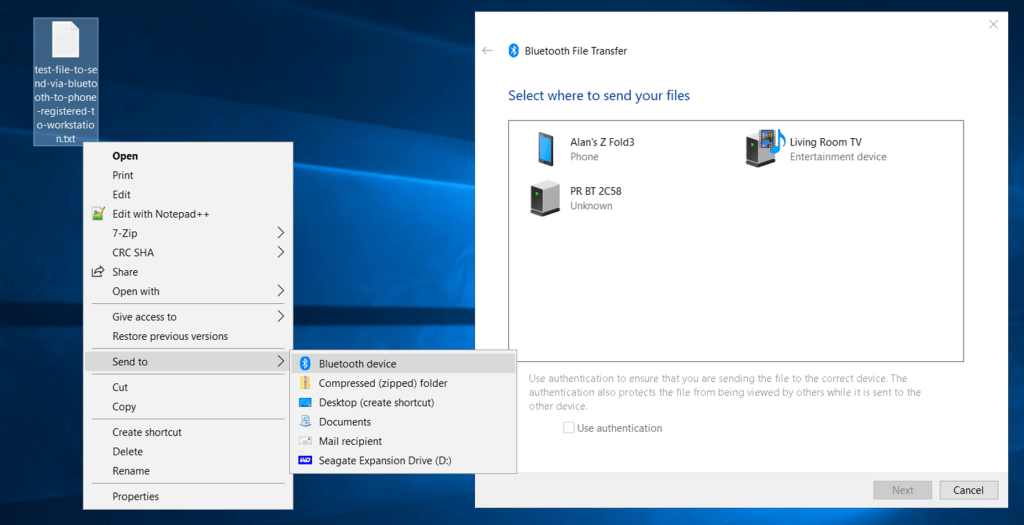

You can check whether Bluetooth is set up on your private laptop/workstation by using its built-in Bluetooth file-transfer feature. Below is a view of this feature in use.

The mobile phone does have to advertise itself over Bluetooth first, but it does NOT have to be trusted (paired) with the workstation beforehand; you approve the transfer when it happens. This is similar to how a ‘passkey’ works with your workstation (public or private).

For ‘passkey’ registration, I found through trial and error that what worked best was to trust (aka “pair”) the mobile phone as a Bluetooth device first. Add and pair your mobile phone as you would a Bluetooth keyboard or mouse.

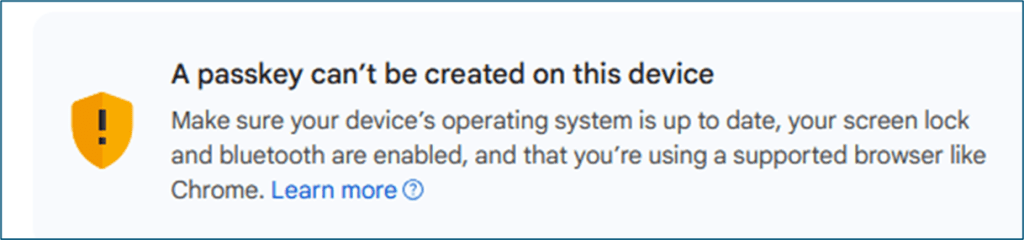

Ignore these false messages – Choose another device

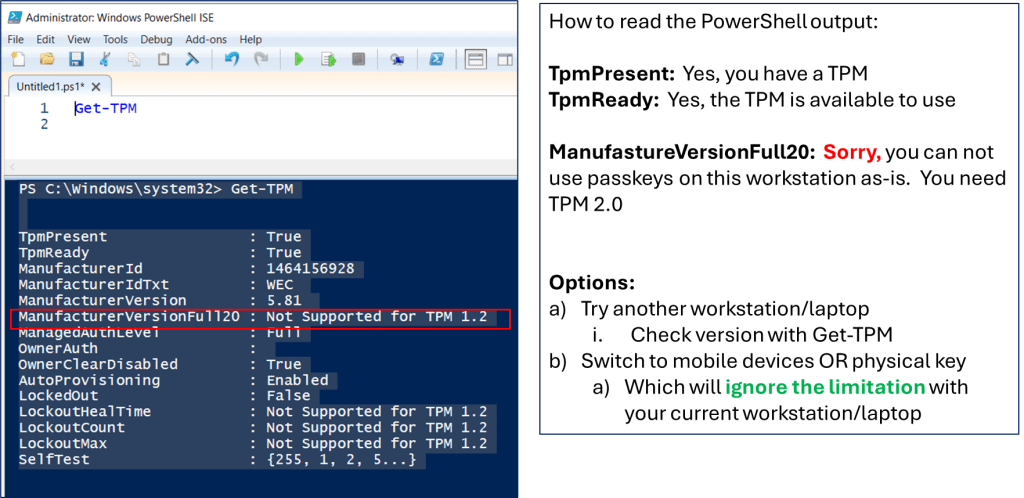

Now, when you visit a site in a browser on your workstation, you can continue even if you see a negative message such as “A passkey can’t be created on this device”. These messages only concern your workstation (whether it alone can hold the ‘passkey’). We don’t care about the workstation at this point, especially if you will eventually be traveling and want the passkey on your mobile phone.

Look for a button or link labeled “Use another device” or similar.

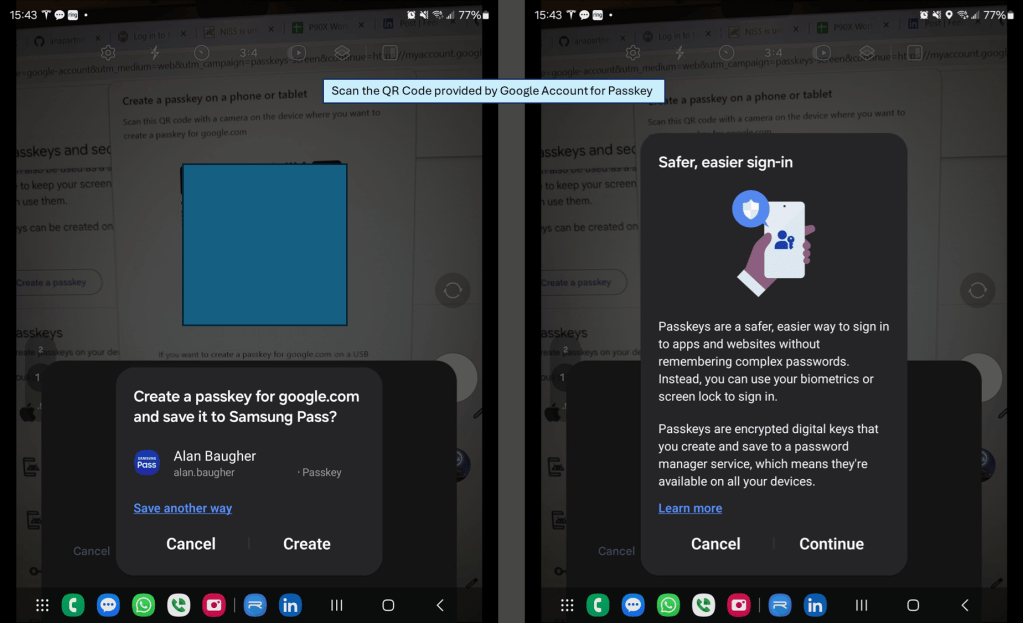

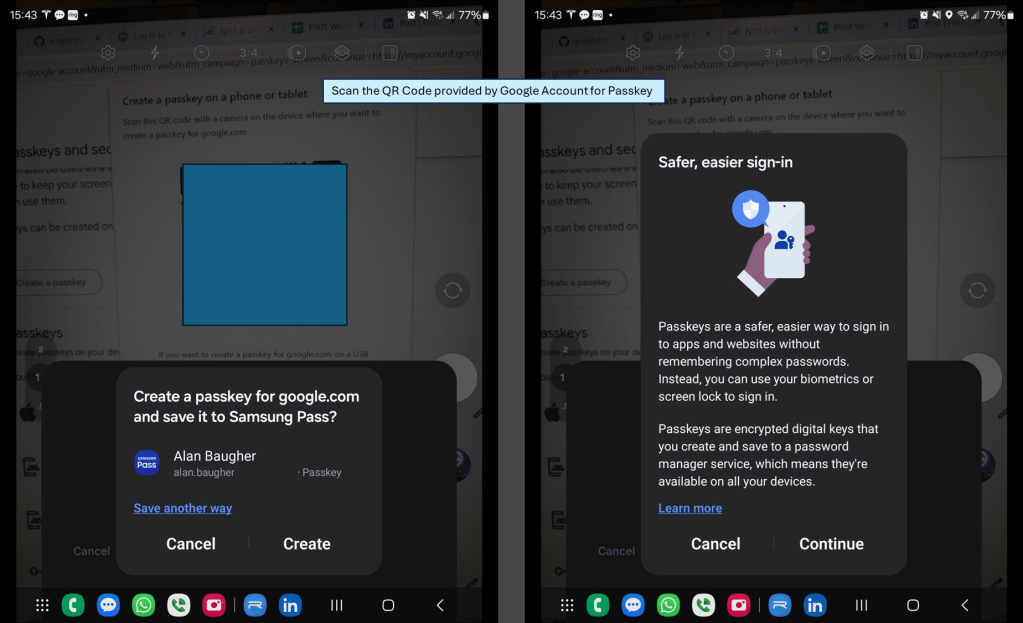

Example of ‘passkey’ registration: after you select your mobile phone, the browser will usually display a QR code. Scan it with the phone you previously paired over Bluetooth with your private laptop/workstation. The phone then generates a new, unique private/public key pair (aka the ‘passkey’) just for your account on that site, keeping the private key and giving only the public key to the site.

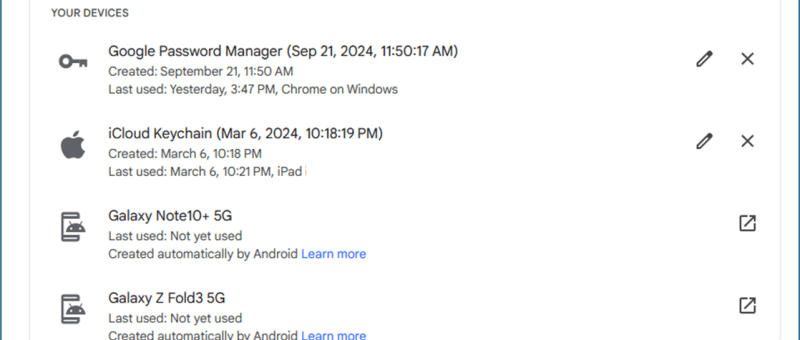

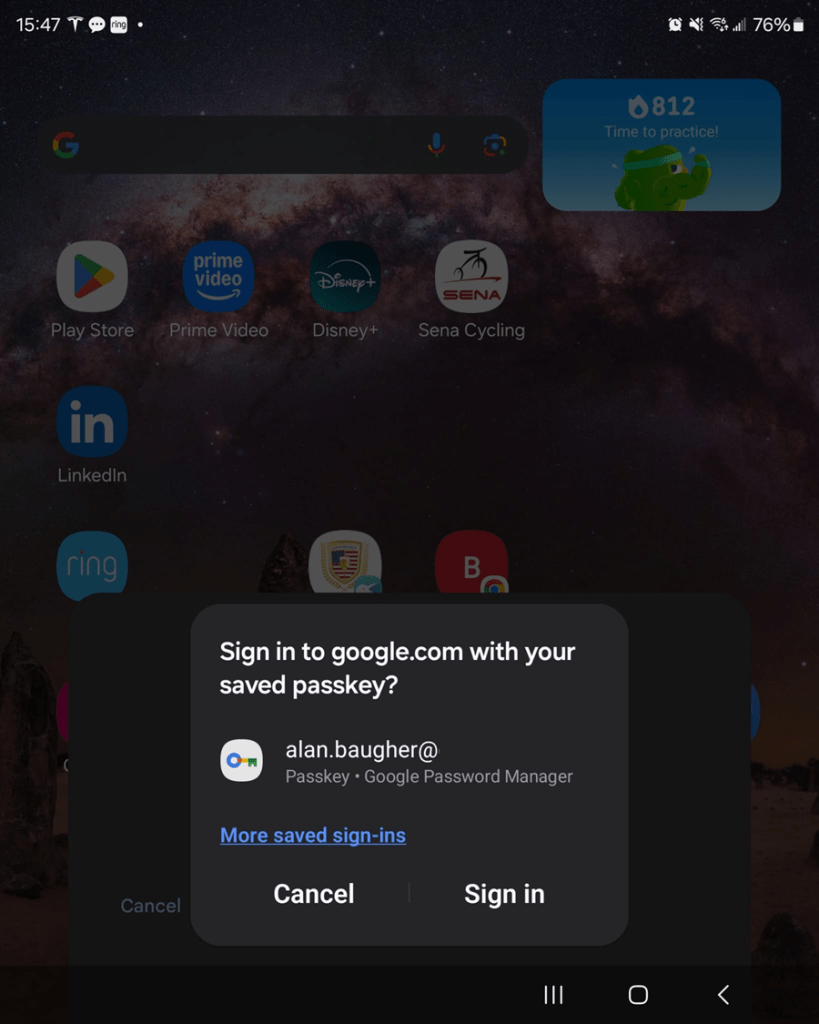

After registering the ‘passkey’, you can use it from your phone’s browser as well as from any workstation/laptop, including public workstations. When you sign in from a workstation, you should see a prompt on your mobile phone asking you to authenticate with your ‘passkey’.

Now, even if you receive a phishing email and inadvertently click its link, the fake site cannot use your passkey, because it only works on the real site. If the fake site asks for a password and you know you have a ‘passkey’, you can stop the process in its tracks.

Please be aware that your original password still exists on the legitimate site; adding a ‘passkey’ for authentication has NOT removed it. If you lose your mobile phone, you will most likely need to register a new ‘passkey’ with a new mobile phone. The FIDO Alliance is working with vendors to allow possible recovery, but some argue that, while useful, recovery defeats the purpose of a ‘passkey’ that ONLY you have access to. You can catch up on the latest recommendations.

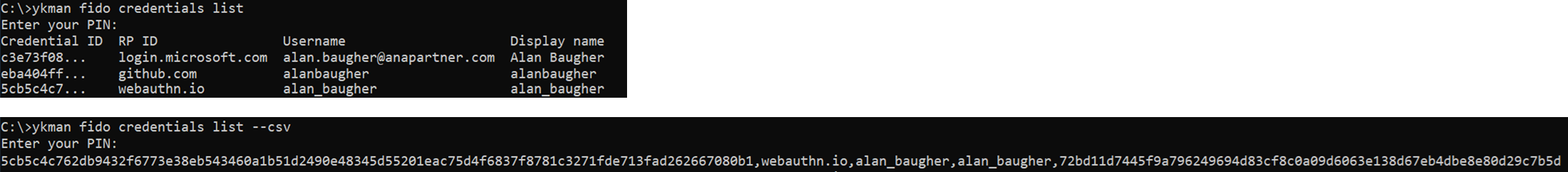

As a backup to my mobile phone, I am a fan of the YubiKey 5C NFC. This model has room for 100 ‘passkeys’ (on firmware 5.7 and later; earlier YubiKey 5 firmware holds 25), and with USB-C and NFC I can use it with almost any mobile phone or laptop. I also set a long PIN on it, so it combines “something you know” with “something you have”, just like a mobile phone holding a ‘passkey’.
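One practical consequence of a hardware backup: a passkey on a YubiKey is a separate key pair, not a copy of the one on your phone, so the YubiKey only works as a backup on sites where you register it as an additional passkey. A minimal model of that idea (the `Account` class and the device labels are hypothetical, not any website’s real data model):

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Account:
    """Hypothetical model of one website account; not any real site's data model."""
    user_name: str
    has_password: bool = True  # adding a passkey does not remove the password
    passkeys: dict[str, str] = field(default_factory=dict)  # credential id -> device holding it

    def register_passkey(self, credential_id: str, device: str) -> None:
        # Each registration creates a separate key pair on that one device.
        self.passkeys[credential_id] = device

    def can_sign_in_with(self, devices_in_hand: set[str]) -> bool:
        # Any one registered device is enough to sign in with a passkey.
        return any(device in devices_in_hand for device in self.passkeys.values())


bank = Account("alice")
bank.register_passkey("cred-phone", "phone")
print(bank.can_sign_in_with({"yubikey"}))  # False: phone lost, key never registered here
bank.register_passkey("cred-yubikey", "yubikey")
print(bank.can_sign_in_with({"yubikey"}))  # True
```

The takeaway is to register the backup key on each important site while you still have your phone.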

Yubico’s long infographic on the differences between ‘passkey’ types was useful.

Help your family, friends, and neighbors get on this ‘passkey’ bandwagon.

Hopefully, knowing how to handle the Bluetooth ‘gotcha’ during registration will let you use your mobile phone more effectively with your laptop. Until your financial institutions and others offer ‘passkey’ as an option, you can still use a password plus an authentication app as your interim authentication.
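For comparison, most authentication apps generate time-based one-time passwords (TOTP, RFC 6238). The sketch below follows that RFC using only Python’s standard library; the `totp` function name is our own. The code depends only on a shared secret and the clock, never on the site’s domain, so a phishing page that asks for it can forward it to the real site within the roughly 30-second window. That is why it is only an interim measure compared with a passkey.

```python
from __future__ import annotations

import hashlib
import hmac
import struct
import time


def totp(secret: bytes, unix_time: float | None = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1 (the common default)."""
    if unix_time is None:
        unix_time = time.time()
    counter = int(unix_time // step)  # which 30-second window we are in
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # RFC 4226 dynamic truncation
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# RFC 6238 test vector: ASCII secret "12345678901234567890" at T = 59 s.
print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```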

To learn more, you may wish to read the longer blog entry:

Benefits of Passkeys: Stop Man-in-the-Middle / Phishing Attacks – ANA Technology Partner

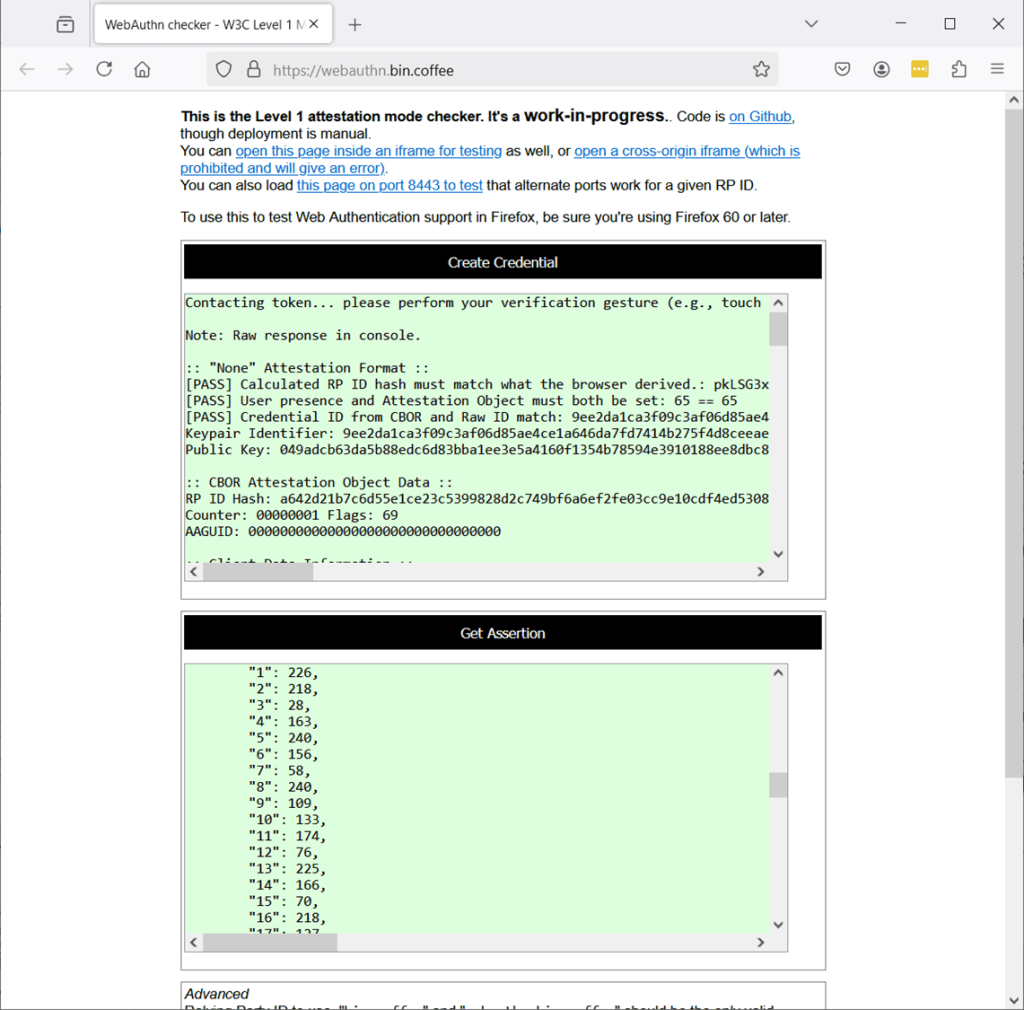

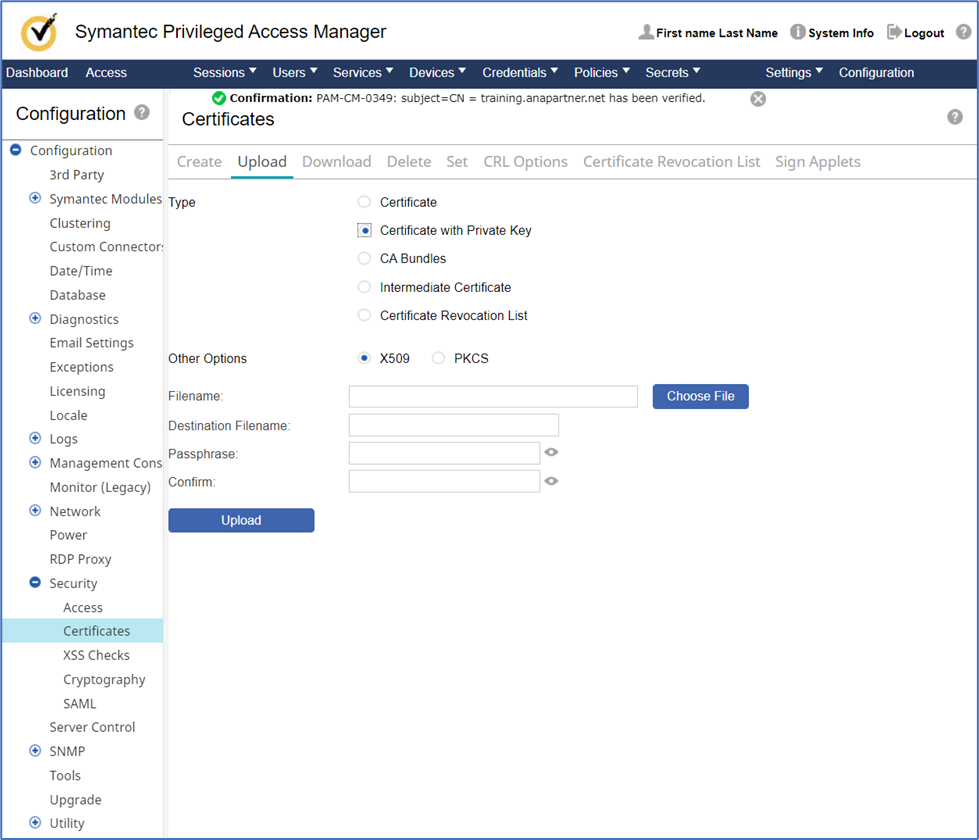

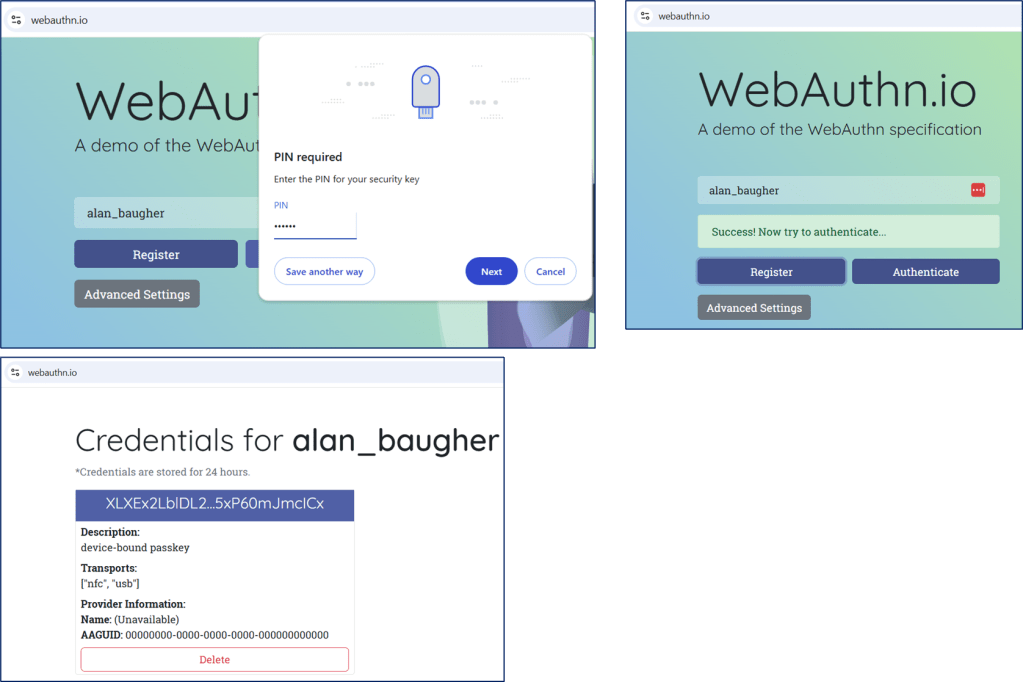

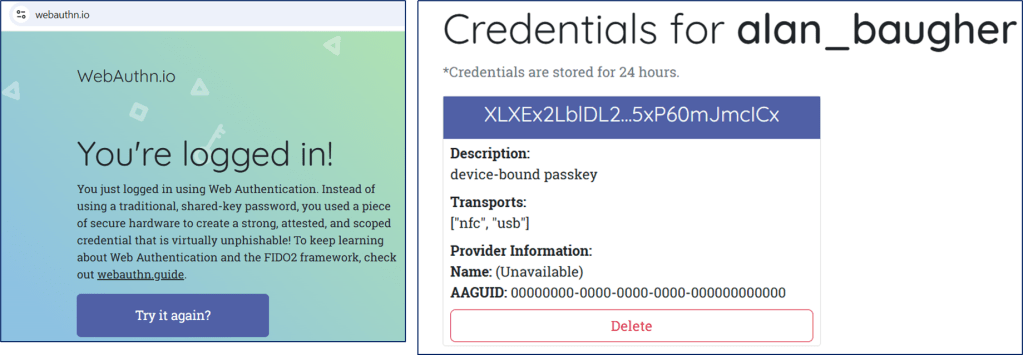

Optional: Testing with an external site

A useful site for testing a ‘passkey’ is webauthn.io.

After creating your ‘passkey’, use the website again, but do not enter your username. Click the Authenticate button and let the browser work with your workstation to pick a passkey associated with the website.
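Leaving the username empty tests a “discoverable” passkey, one the authenticator can find by itself. When you do type a username, the site can instead send a list of the credential IDs it has on file (the WebAuthn `allowCredentials` option). A simplified model of that choice, with hypothetical names; the real exchange is the binary CTAP protocol between browser and authenticator:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StoredCredential:
    credential_id: str
    rp_id: str
    user_name: str
    discoverable: bool  # a "resident" passkey that also stores the user name on the device


def usable_credentials(on_device: list[StoredCredential], rp_id: str,
                       allow_list: list[str]) -> list[StoredCredential]:
    """Simplified view of which credentials an authenticator can use for one sign-in."""
    for_site = [c for c in on_device if c.rp_id == rp_id]
    if allow_list:
        # Username entered: the site sends the credential IDs it has on file.
        return [c for c in for_site if c.credential_id in allow_list]
    # No username: only discoverable passkeys can be found and offered.
    return [c for c in for_site if c.discoverable]


device = [
    StoredCredential("c1", "webauthn.io", "alice", discoverable=True),
    StoredCredential("c2", "webauthn.io", "bob", discoverable=False),
    StoredCredential("c3", "example.com", "alice", discoverable=True),
]
print([c.user_name for c in usable_credentials(device, "webauthn.io", [])])  # ['alice']
```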

A view of the ‘passkey’ on a YubiKey via the command-line tool (ykman):

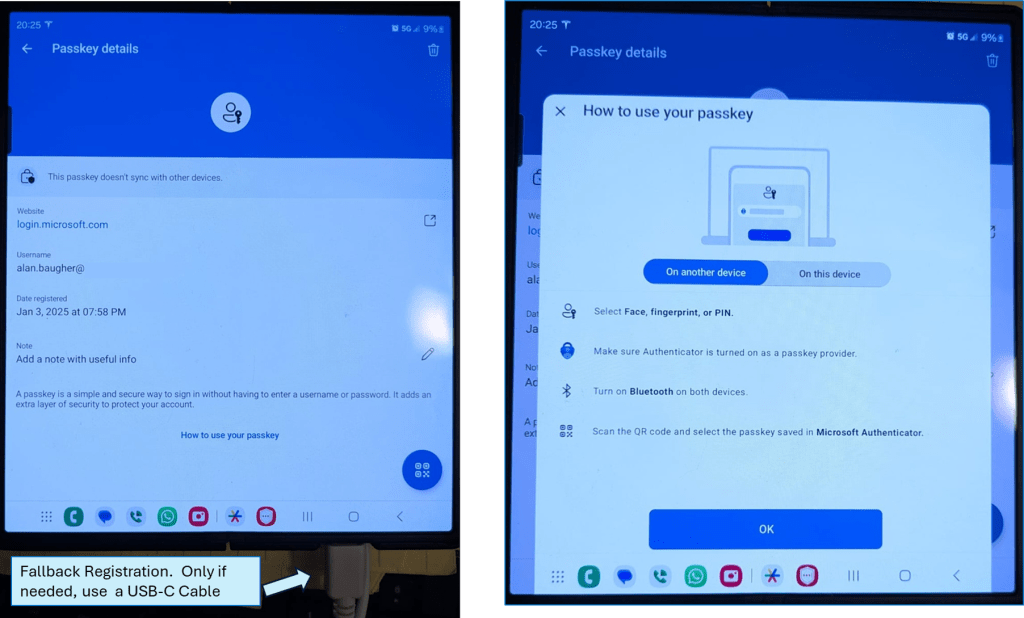

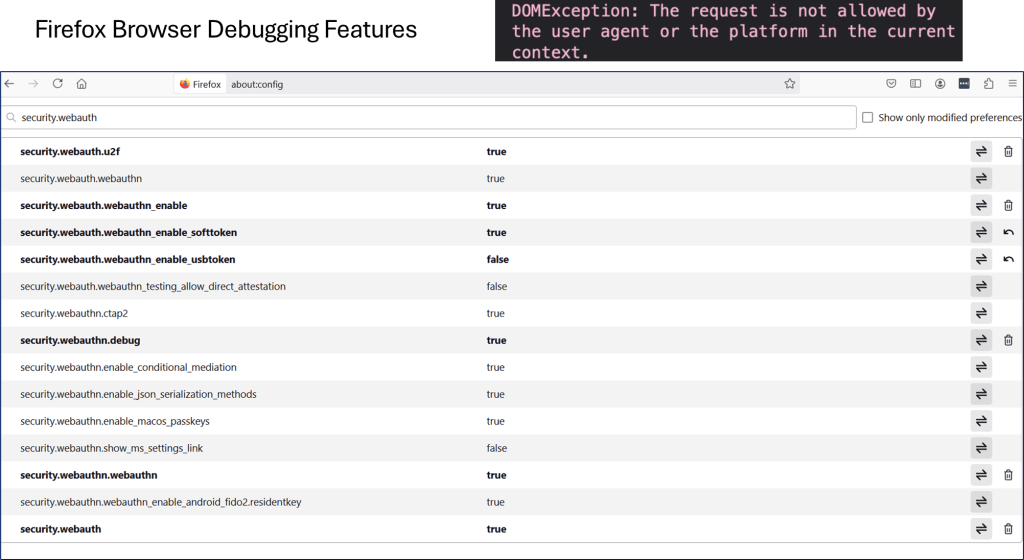

Fallback Registration – USB

If registration over Bluetooth still fails, fall back to connecting your mobile phone to your private workstation/laptop with a USB-C cable. Please ensure that a “passkey” management middleware app on your phone is enabled to work with the vendor’s website.

Example: Microsoft seems to prefer that its Microsoft Authenticator app hold the “passkey” associated with your Microsoft email account (work, school, or otherwise). The image below shows that I have many choices of middleware application. Out of the box (OOTB), my Samsung mobile phone uses Samsung Pass, but you can change this at will when you register your “passkey”. Recall that the actual “passkey” is still stored in the hardware of the mobile device.

Within Microsoft Authenticator, when you select your MS email address (work/personal), you should see a “passkey” option. My previous attempts at registration using Bluetooth failed, but when I used the USB-C cable, I had no issue.